Goodbye, Siri? Apple Reveals “Vocal Shortcuts” are Coming to iOS 18

Apple has announced “Vocal Shortcuts” as part of a range of new accessibility features for the iPhone to be included in iOS 18. The feature lets you use voice commands to run tasks on your Apple device without saying “Siri” or “Hey Siri” first.

In recent years, Apple has revealed its latest accessibility enhancements for upcoming operating systems each May, coinciding with Global Accessibility Awareness Day and ahead of the June WWDC keynote, where they are officially introduced. 2024 has been no exception, with a range of new features unveiled.

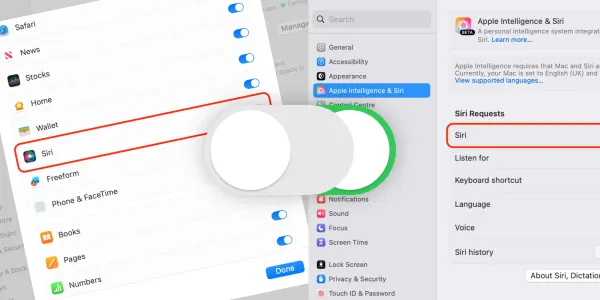

The most interesting Siri development, “Vocal Shortcuts,” will allow users to assign custom phrases that Siri can understand to run shortcuts and launch complex, multistep tasks. Apple hasn’t released any details on how the feature works (those will likely come at the WWDC iOS 18 keynote), but in theory, it means you can ask Siri without asking Siri.

Lights on.

Message Mom “I’ll be there in ten minutes.”

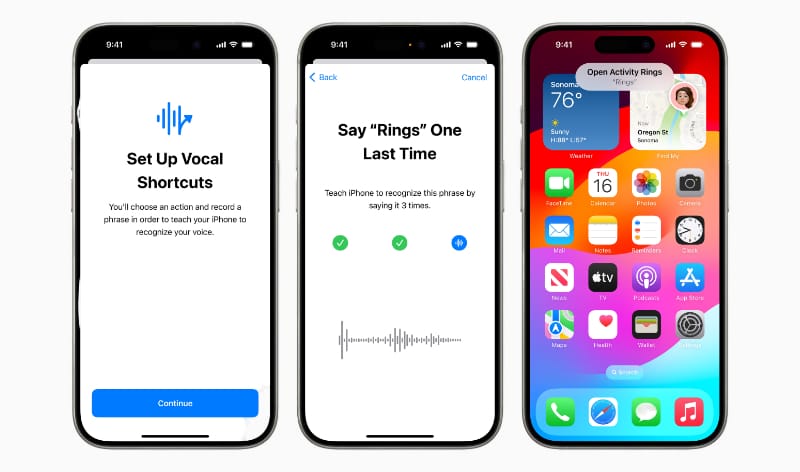

Based on Apple’s screenshots, a Vocal Shortcut is created by repeating a phrase three times; the device learns to recognize the command and execute the associated actions when the phrase is spoken.

It’ll be interesting to see how this feature holds up in daily use, given that trigger phrases could easily come up in ordinary conversation.

Another new feature, “Listen for Atypical Speech,” gives users an option to enhance speech recognition for a wider range of speech. It uses on-device machine learning to recognize a user’s speech patterns.

According to Apple:

“Designed for users with acquired or progressive conditions that affect speech, such as cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke, these features provide a new level of customization and control, building on features introduced in iOS 17 for users who are nonspeaking or at risk of losing their ability to speak.”

Mark Hasegawa-Johnson, principal investigator of the Speech Accessibility Project at the Beckman Institute for Advanced Science and Technology at the University of Illinois Urbana-Champaign, said: “Artificial intelligence has the potential to improve speech recognition for millions of people with atypical speech, so we are thrilled that Apple is bringing these new accessibility features to consumers.”

Hasegawa-Johnson continued: “The Speech Accessibility Project was designed as a broad-based, community-supported effort to help companies and universities make speech recognition more robust and effective, and Apple is among the accessibility advocates who made the Speech Accessibility Project possible.”

iOS 18 is expected to be released in the fall, coinciding with the launch of the next-generation iPhone.